Understanding Logistic Regression Cost Function: Key to Accurate Binary Classification

DL(Part 7)

📚Chapter2: Logistic Regression as a Neural Network


Description

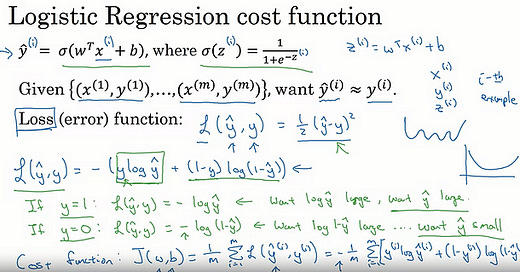

In the field of machine learning, logistic regression is a popular algorithm used for binary classification problems. One of the key components of logistic regression is the cost function, which quantifies the difference between the predicted and actual outcomes. This blog post will provide a comprehensive explanation of the logistic regression cost function, its importance, and how it is calculated.

Section 1: Introduction to Logistic Regression

Before delving into the details of the cost function, let’s first understand what logistic regression is and its significance in machine learning. Logistic regression is a supervised learning algorithm that is primarily used for binary classification tasks. It predicts the probability of an instance belonging to a particular class, based on the input features.

Section 2: The Need for a Cost Function

In logistic regression, the goal is to find the parameters that minimize the difference between the predicted probabilities and the actual class labels. This is where the cost function comes in: it measures how well the model is performing and guides the search for the optimal parameters. The previous tutorial introduced the logistic regression model. To train its parameters W and B, you need to define a cost function, so let's take a look at it.

Section 3: Definition of the Logistic Regression Cost Function

The logistic regression cost function, also known as the log loss or cross-entropy loss, measures the error between the predicted probabilities and the true class labels. It is convex in the parameters, which means that any local minimum is also a global minimum, and this makes it well suited to optimization with gradient descent. Before defining it, the next section recaps the model from the previous tutorial.

Section 4: Intuition Behind the Cost Function

Recall that the output is $\hat{y} = \sigma(W^T x + B)$, where the sigmoid function is $\sigma(z) = \frac{1}{1 + e^{-z}}$. To learn the parameters of the model, you are given a training set of $m$ examples, and it seems natural to want parameters W and B such that, at least on the training set, the predictions $\hat{y}^{(i)}$ are close to the ground truth labels $y^{(i)}$.

To fill in a little more detail: $\hat{y}$ above is defined for a single training example $x$, and superscripts in parentheses index the different training examples. The prediction on training example $i$ is $\hat{y}^{(i)} = \sigma(z^{(i)})$, where $z^{(i)} = W^T x^{(i)} + B$. Throughout this course, the superscript $(i)$ on $x$, $y$, $z$, or anything else refers to the data associated with the $i$-th training example.
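The prediction step described above can be sketched in a few lines of NumPy. This is only an illustration: the function names `sigmoid` and `predict_proba`, and the toy values for `X`, `W`, and `B`, are assumptions made for this example and do not come from the course.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: maps any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(X, W, B):
    """Return y_hat^(i) = sigmoid(W^T x^(i) + B) for every row x^(i) of X."""
    z = X @ W + B  # z^(i) for all training examples at once
    return sigmoid(z)

# Hypothetical toy data: 3 training examples with 2 features each.
X = np.array([[0.5, 1.5], [-1.0, 2.0], [3.0, -0.5]])
W = np.array([0.4, -0.2])  # assumed parameter values, not learned
B = 0.1

y_hat = predict_proba(X, W, B)
print(y_hat)  # one probability per training example
```

Each row of `X` plays the role of one $x^{(i)}$, so a single matrix product yields every $z^{(i)}$ without an explicit loop.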

Now let's see what loss function, or error function, we can use to measure how well the algorithm is doing. One thing you could do is define the loss, when the algorithm outputs $\hat{y}$ and the true label is $y$, to be the squared error or one half of the squared error: $L(\hat{y}, y) = \frac{1}{2}(\hat{y} - y)^2$. You could do this, but in logistic regression people usually don't, because the resulting optimization problem becomes non-convex. You end up with multiple local optima, so gradient descent may not find a global optimum. If that last point is unclear, don't worry; we'll get to it in a later tutorial.

The intuition to take away is that the loss function $L$ measures how good the output $\hat{y}$ is when the true label is $y$. Squared error seems like a reasonable choice, except that it makes gradient descent work poorly. So in logistic regression we define a different loss function that plays a similar role but gives a convex optimization problem, which is much easier to optimize:

$$L(\hat{y}, y) = -\big(y \log \hat{y} + (1 - y) \log(1 - \hat{y})\big)$$

Here is some intuition for why this loss function makes sense. Just as with squared error, we want this loss to be as small as possible. Consider the two cases.

If $y = 1$, the second term vanishes because $1 - y = 0$, so $L(\hat{y}, y) = -\log \hat{y}$. Making $-\log \hat{y}$ small means making $\log \hat{y}$ large, which means making $\hat{y}$ large. But $\hat{y}$ is the output of the sigmoid function, so it can never be bigger than 1. So when $y = 1$, the loss pushes $\hat{y}$ as close to 1 as possible.

If $y = 0$, the first term vanishes, so $L(\hat{y}, y) = -\log(1 - \hat{y})$. Making this small means making $\log(1 - \hat{y})$ large, which means making $\hat{y}$ small. Because $\hat{y}$ has to be between 0 and 1, the loss pushes the parameters to make $\hat{y}$ as close to 0 as possible.

There are many functions with roughly this effect: if $y = 1$, try to make $\hat{y}$ large, and if $y = 0$, try to make $\hat{y}$ small. What we have given here is a somewhat informal justification; a later optional tutorial gives a more formal justification for why logistic regression uses a loss function of this particular form.

Finally, the loss function is defined with respect to a single training example. It measures how well you are doing on that one example. The cost function, defined next, measures how well you are doing on the entire training set.
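To make the two cases concrete, the short sketch below evaluates the loss for a few predictions under both labels. The helper name `loss` and the sample values of `y_hat` are chosen only for illustration.

```python
import numpy as np

def loss(y_hat, y):
    """Cross-entropy loss for one example: -(y*log(y_hat) + (1-y)*log(1-y_hat))."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

# Illustrative predictions: low, undecided, and high probability of class 1.
for y_hat in (0.1, 0.5, 0.9):
    print(f"y_hat={y_hat}: loss if y=1 -> {loss(y_hat, 1):.4f}, "
          f"loss if y=0 -> {loss(y_hat, 0):.4f}")
```

When the label is 1 the loss shrinks as `y_hat` grows toward 1, and when the label is 0 it shrinks as `y_hat` falls toward 0, which is exactly the behaviour argued for above.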
The cost function $J$, which is applied to the parameters W and B, is the average of the loss function over the training examples, that is, $\frac{1}{m}$ times the sum of the loss on each of the $m$ examples:

$$J(W, B) = \frac{1}{m} \sum_{i=1}^{m} L(\hat{y}^{(i)}, y^{(i)}) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log \hat{y}^{(i)} + (1 - y^{(i)}) \log(1 - \hat{y}^{(i)}) \right]$$

Here $\hat{y}^{(i)}$ is the prediction output by the logistic regression algorithm for a particular set of parameters W and B, and the square brackets make clear that the minus sign applies to the whole sum.

In terms of terminology, the loss function is applied to a single training example, while the cost function is the cost of the parameters. Training a logistic regression model therefore means finding the parameters W and B that minimize the overall cost function $J$.

You have now seen the setup for the logistic regression algorithm: the loss function for a single training example and the overall cost function for the parameters. It turns out that logistic regression can be viewed as a very small neural network. The next tutorial goes over that view so you can start gaining intuition about what neural networks do.
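As a rough sketch of the cost as a function of the parameters, the snippet below averages the per-example losses for two parameter settings. The function name `cost` and the toy dataset are assumptions made for this example.

```python
import numpy as np

def cost(X, y, W, B):
    """J(W, B): mean cross-entropy loss over the m rows of X."""
    y_hat = 1.0 / (1.0 + np.exp(-(X @ W + B)))  # sigmoid(W^T x + B) per row
    per_example = -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))
    return per_example.mean()

# Hypothetical toy training set: 4 examples, 2 features, binary labels.
X = np.array([[1.0, 2.0], [2.0, 0.5], [-1.0, -1.5], [-2.0, 0.0]])
y = np.array([1, 1, 0, 0])

cost_zero = cost(X, y, np.zeros(2), 0.0)           # every prediction is 0.5
cost_good = cost(X, y, np.array([1.0, 0.5]), 0.0)  # separates the two classes
print(cost_zero, cost_good)
```

With all parameters at zero every prediction is 0.5, so the cost equals $\log 2$. Parameters that push each prediction toward its label give a lower cost, which is what training tries to achieve.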

Section 5: Properties of the Cost Function

The logistic regression cost function possesses several desirable properties that make it suitable for training binary classifiers. These properties include:

1. Convexity

The cost function is convex in the parameters W and B, meaning any local minimum is also a global minimum. This allows optimization algorithms like gradient descent to find optimal parameters without getting stuck in a poor local optimum.

2. Continuous and Differentiable

The cost function is continuous and differentiable, which enables us to use gradient-based optimization techniques to minimize it efficiently.

3. Monotonicity

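For a fixed label, the loss is monotonic in the prediction: when the true label is 1 the loss falls steadily as the predicted probability rises toward 1, and when the true label is 0 it falls as the predicted probability drops toward 0. Lowering the cost therefore moves the predictions toward the true labels.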
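These properties are what make gradient descent a natural fit. The sketch below is a minimal illustration and not code from the course: it uses the standard gradients of the cross-entropy cost, $\partial J / \partial W = \frac{1}{m} X^T (\hat{y} - y)$ and $\partial J / \partial B = \frac{1}{m} \sum_i (\hat{y}^{(i)} - y^{(i)})$, with a toy dataset, a learning rate, and a step count that are all assumed for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_and_grads(X, y, W, B):
    """Return the cost J(W, B) and its gradients with respect to W and B."""
    m = X.shape[0]
    y_hat = sigmoid(X @ W + B)
    J = -np.mean(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))
    dW = X.T @ (y_hat - y) / m  # gradient with respect to W
    dB = np.mean(y_hat - y)     # gradient with respect to B
    return J, dW, dB

# Hypothetical toy training set and hyperparameters.
X = np.array([[1.0, 2.0], [2.0, 0.5], [-1.0, -1.5], [-2.0, 0.0]])
y = np.array([1, 1, 0, 0])
W, B = np.zeros(2), 0.0
learning_rate = 0.1

costs = []
for step in range(100):
    J, dW, dB = cost_and_grads(X, y, W, B)
    costs.append(J)
    W = W - learning_rate * dW  # step against the gradient
    B = B - learning_rate * dB

print(costs[0], costs[-1])  # cost before training and at the last step
```

Because the cost is convex and differentiable, a sufficiently small learning rate lowers the cost at every step on this toy problem. Larger learning rates can overshoot, so the value used here should be read as an assumption rather than a recommendation.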

Section 6: Cost Function Implementation in Python

```python
import numpy as np


def logistic_regression_cost_function(y_true, y_pred):
    """Computes the logistic regression cost function.

    Args:
        y_true: A numpy array of the true labels (0 or 1).
        y_pred: A numpy array of the predicted probabilities.

    Returns:
        The cost as a single float: the mean cross-entropy loss.
    """
    # Average the per-example losses over the whole array.
    cost = -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return cost


# Example usage:
y_true = np.array([1, 0, 1, 0])
y_pred = np.array([0.8, 0.2, 0.7, 0.3])
cost = logistic_regression_cost_function(y_true, y_pred)
print(cost)
# Output: 0.2899092476264711
```

The lower the cost function value, the better the model is fitting the training data.
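The function above takes the logarithm of the predictions directly, so a prediction of exactly 0 or 1 produces `nan` or an infinite cost. One common workaround, sketched here as an assumption and not as part of the original implementation, is to clip the predictions slightly away from 0 and 1 first. The name `stable_cost` and the default `eps` value are hypothetical choices for this example.

```python
import numpy as np

def stable_cost(y_true, y_pred, eps=1e-15):
    """Mean cross-entropy with predictions clipped into [eps, 1 - eps]."""
    y_pred = np.clip(y_pred, eps, 1 - eps)  # avoid log(0)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

y_true = np.array([1, 0, 1, 0])

# Same inputs as the example above: clipping leaves the result unchanged.
print(stable_cost(y_true, np.array([0.8, 0.2, 0.7, 0.3])))

# Predictions of exactly 0 or 1 now give a finite cost instead of nan.
print(stable_cost(y_true, np.array([1.0, 0.0, 1.0, 0.0])))
```

Clipping changes the cost only when a prediction lies within `eps` of 0 or 1, so well-behaved inputs are unaffected.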

If you would like to know more, have a read of:

Logistic Regression: Cost Function

Logistic Regression: Testing

Logistic Regression: Training

Section 7: Conclusion

In this blog post, we have explored the logistic regression cost function in detail. We have seen how it measures the error between predicted probabilities and true class labels, why it is preferred over squared error for training binary classifiers, which properties make it easy to optimize, and how to compute it in Python. Understanding the logistic regression cost function is essential for effective model training and evaluation in machine learning applications.
